Organizations are constantly confronted with growing complexity in information management: dispersed knowledge bases, overlapping procedures, and fragmented data across countless systems.

Work smarter with AI, thanks to the Model Context Protocol (MCP)

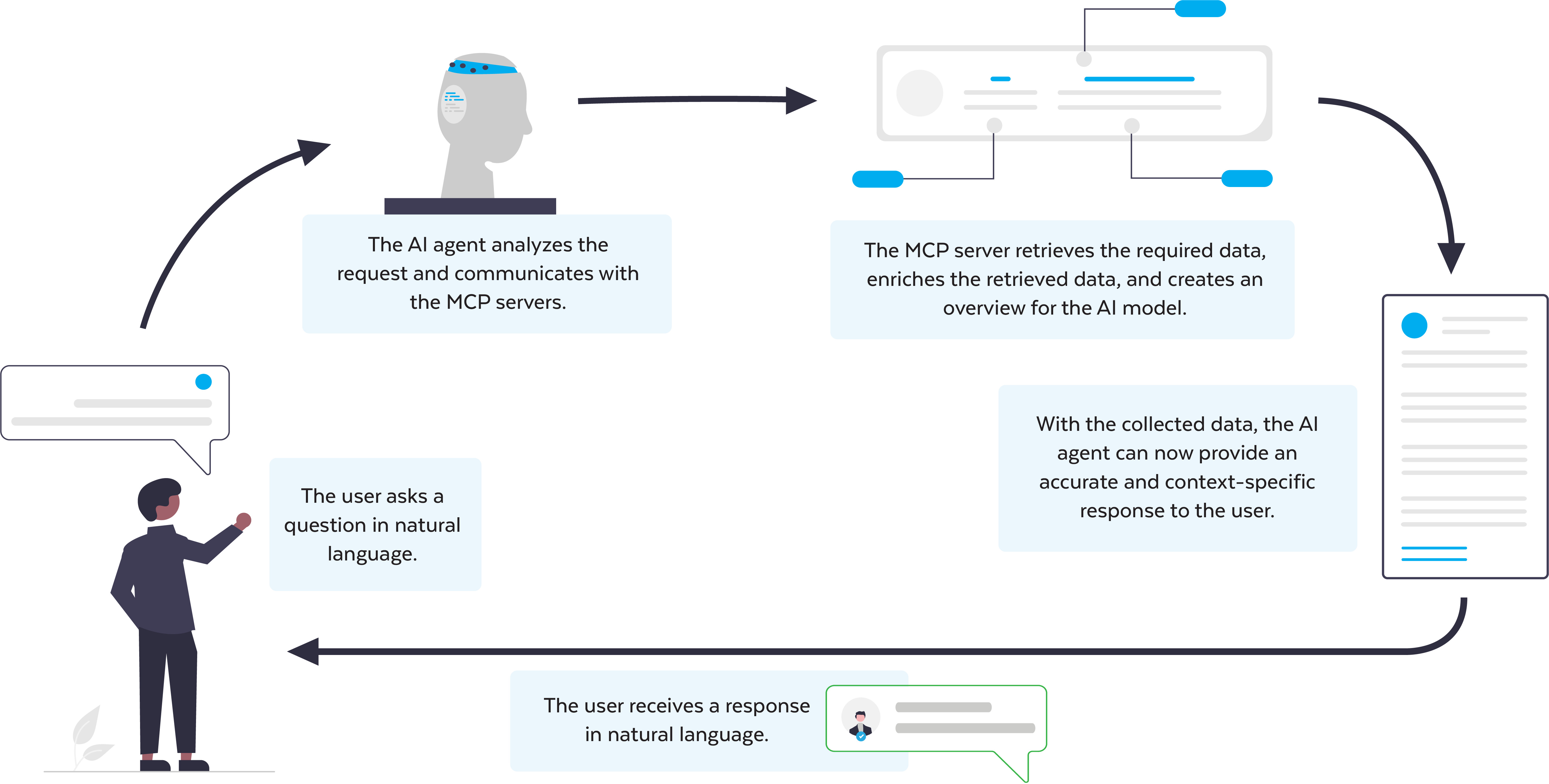

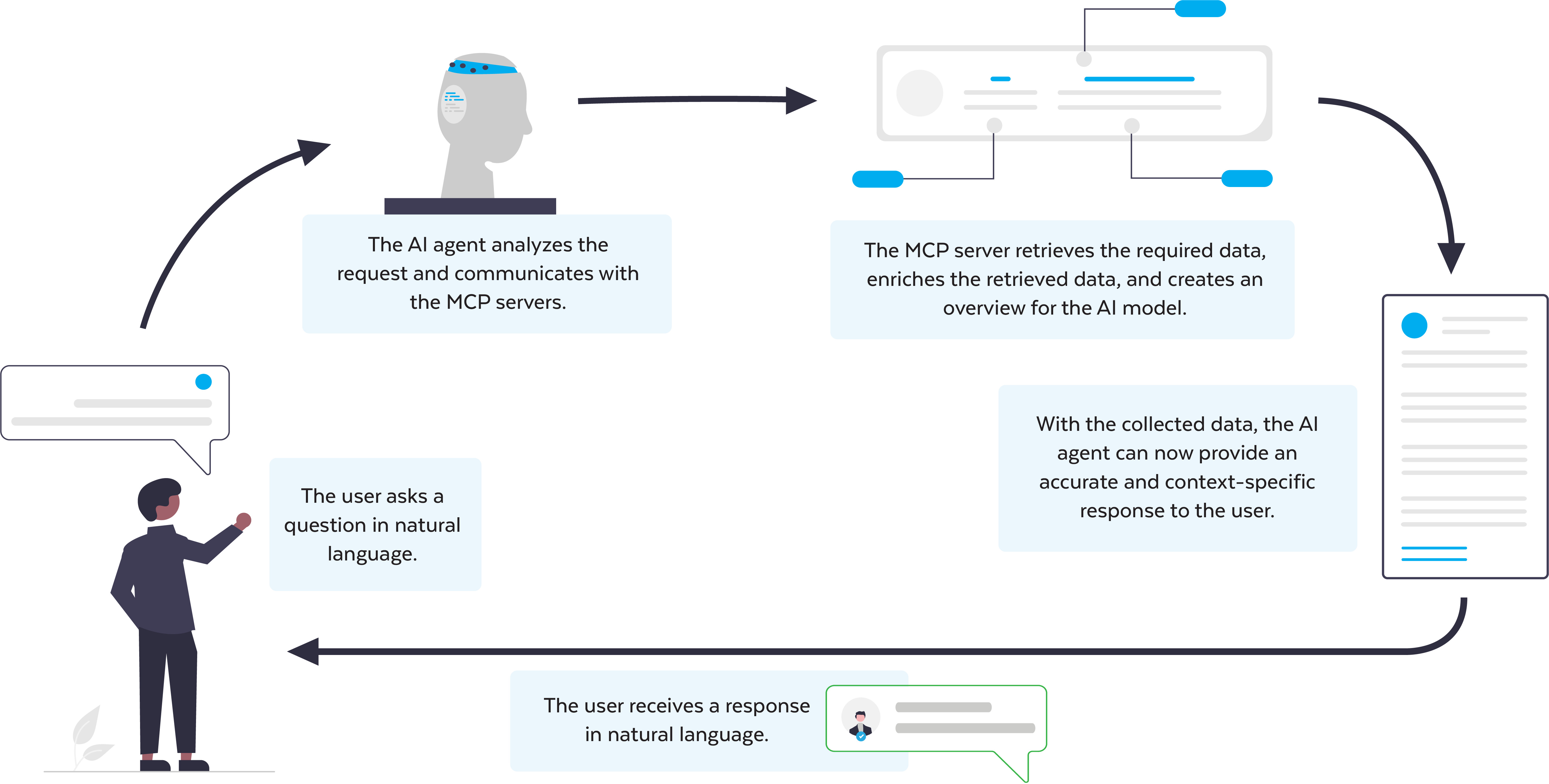

Large Language Models (LLMs) are advanced AI models, trained on enormous amounts of text, that generate intelligent answers. However, they often lack up-to-date or company-specific data. The Model Context Protocol (MCP) offers a solution: you provide structured supplementary data via MCP servers, and your AI agent combines this data with the LLM's general knowledge to deliver context-aware output based on your data.

What is the MCP (Model Context Protocol)?

The Model Context Protocol (MCP) is an open-source standard for securely connecting data sources, such as database records, wikis, and APIs, to Large Language Models. MCP lets your chatbot combine the LLM's language knowledge with your own information and deliver well-founded answers.

| Core principle | Explanation |
|---|---|
| Standardized Data Model | One uniform message structure (based on JSON-RPC 2.0), so systems can communicate with each other without custom integrations. |
| Context Elements | Prompts (predefined questions), Tools (API calls, database queries), and Resources (documents, schemas) are provided directly by the MCP server. |
| Robust Security | Clear principles for security, privacy, and trust & safety (such as user consent and data privacy). |
| Scalable Design | Works equally well in proof-of-concept and mission-critical production environments. |
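To make the table more concrete, here is a minimal, standard-library-only Python sketch of how an MCP server might route incoming requests to its three context elements. MCP messages are JSON-RPC 2.0, and the method names `tools/list`, `tools/call`, `resources/read`, and `prompts/get` come from the MCP specification; the result shapes are simplified, and the prompt, the resource URI, and the `lookup_product` tool are hypothetical examples. A real server would normally be built with an official MCP SDK and would also handle the `initialize` handshake, input schemas, and the transport layer.

```python
import json
from typing import Any, Callable

# Hypothetical prompt template (a "Prompt" context element).
PROMPTS = {
    "summarize_ticket": "Summarize support ticket {ticket_id} in three bullet points.",
}

# Hypothetical document keyed by URI (a "Resource" context element).
RESOURCES = {
    "docs://schemas/customer": '{"id": "int", "name": "str", "region": "str"}',
}


def lookup_product(sku: str) -> str:
    """Hypothetical tool: in practice this would call an API or run a query."""
    catalog = {"A-100": "Widget, 12.50 EUR", "B-200": "Gadget, 30.00 EUR"}
    return catalog.get(sku, "unknown SKU")


# Registry of callable tools (the "Tool" context element).
TOOLS: dict[str, Callable[..., str]] = {"lookup_product": lookup_product}


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result: Any = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        # An unknown tool name raises KeyError here; a real server would
        # return a JSON-RPC error object instead.
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    elif method == "resources/read":
        uri = params["uri"]
        result = {"contents": [{"uri": uri, "text": RESOURCES[uri]}]}
    elif method == "prompts/get":
        text = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        result = {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})
```

For example, sending `{"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "lookup_product", "arguments": {"sku": "A-100"}}}` to `handle` returns a response whose `content` carries the text `Widget, 12.50 EUR`. Because every element is reached through the same message format, a client does not need custom code per data source.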

This approach lets you obtain AI insights quickly, securely, and at scale, with answers that are both smart and accurate. This illustration explains the MCP process flow:

MCP (Model Context Protocol) explained
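The same flow can be sketched from the client side in Python. In this hedged sketch, `fake_llm` is a hypothetical rule-based stand-in for a real model, `call_mcp_tool` stands in for sending a `tools/call` request to an MCP server, and the order data is invented. What the sketch is meant to show is the sequence: the model sees the available tools, requests one, the client executes it through the server, and the result is fed back so the model can answer.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class ToolCall:
    name: str
    arguments: dict


def fake_llm(question: str, tools: list, context: Optional[str] = None) -> Union[ToolCall, str]:
    """Hypothetical stand-in for an LLM: requests a tool once, then answers."""
    if context is None and "order" in question.lower() and "get_order_status" in tools:
        order_id = question.split()[-1].strip("?")
        return ToolCall("get_order_status", {"order_id": order_id})
    return f"Answer based on: {context}" if context else "I don't have that data."


def call_mcp_tool(call: ToolCall) -> str:
    """Hypothetical MCP server tool; a real client would send a tools/call request."""
    orders = {"1042": "shipped on 3 March"}
    return orders.get(call.arguments["order_id"], "not found")


def answer(question: str) -> str:
    tools = ["get_order_status"]              # 1. client discovers tools (tools/list)
    reply = fake_llm(question, tools)         # 2. model sees the question and the tool list
    if isinstance(reply, ToolCall):           # 3. model asks for a tool call
        result = call_mcp_tool(reply)         # 4. client forwards it to the MCP server
        reply = fake_llm(question, tools, context=result)  # 5. result goes back to the model
    return reply


print(answer("What is the status of order 1042?"))
```

The model never touches the data source directly; it only sees the tool result that the client chooses to pass back.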

How can companies use MCP?

Below are several concrete MCP examples. These practical cases show how companies deploy MCP in various business processes.

Secure database queries within the organization

With MCP, organizations can have questions about private databases, such as a CRM or ERP system, answered without giving the AI agent unrestricted access to sensitive data. The AI agent receives only what it needs, such as specific customer or product information, as determined by defined access rules. This enables direct and secure answers to queries and reduces the risk of data falling into the wrong hands.
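A minimal sketch of such access rules, assuming a role-based column allowlist (a hypothetical policy; real deployments might rely on database permissions, row-level security, or OAuth scopes instead). It uses Python's built-in `sqlite3` module with an in-memory demo table; the `customers` table, the roles, and the `get_customer` tool are illustrative names, not part of MCP itself.

```python
import sqlite3

# Hypothetical access rules: which columns each role may see.
ACCESS_RULES = {
    "support": ["name", "city"],
    "finance": ["name", "city", "credit_limit"],
}


def setup_demo_db() -> sqlite3.Connection:
    """Hypothetical CRM table with one sensitive column (credit_limit)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT, credit_limit REAL)"
    )
    conn.executemany(
        "INSERT INTO customers (name, city, credit_limit) VALUES (?, ?, ?)",
        [("Acme BV", "Utrecht", 50000.0), ("Globex", "Ghent", 12000.0)],
    )
    return conn


def get_customer(conn: sqlite3.Connection, role: str, name: str) -> dict:
    """Tool body: return only the columns the caller's role is allowed to see."""
    columns = ACCESS_RULES.get(role)
    if columns is None:
        raise PermissionError(f"role {role!r} has no access")
    # Column names come from the fixed allowlist above, never from user input;
    # the customer name is passed as a bound parameter to prevent SQL injection.
    sql = f"SELECT {', '.join(columns)} FROM customers WHERE name = ?"
    row = conn.execute(sql, (name,)).fetchone()
    return dict(zip(columns, row)) if row else {}


conn = setup_demo_db()
print(get_customer(conn, "support", "Acme BV"))  # credit_limit is not included
```

Only the filtered dictionary is returned to the AI agent as the tool result, so a support-role conversation never sees the credit limit.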

Generate graphs from your own data with MCP

Companies use MCP-based tools to generate graphs from company data automatically. The AI agent turns requests such as "Show sales by region" into graphs (bar, line, pie) based on live database values. This approach removes most of the custom integration code and makes data visualization simple and readily available.
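As a rough illustration, the tool behind a request like "Show sales by region" could aggregate the query result and return a chart specification for the client to render. The sales rows, the dictionary-based spec format, and the `sales_by_region_chart` function are hypothetical; a real setup would query the live database and might pass the spec to a charting library. A small text renderer is included so the sketch runs with the standard library only.

```python
from collections import defaultdict

# Hypothetical rows, as they might come back from a live sales query.
SALES = [
    {"region": "North", "amount": 1200.0},
    {"region": "South", "amount": 800.0},
    {"region": "North", "amount": 300.0},
    {"region": "West", "amount": 950.0},
]


def sales_by_region_chart(rows: list, chart_type: str = "bar") -> dict:
    """Hypothetical MCP tool: sum sales per region and return a chart spec."""
    if chart_type not in {"bar", "line", "pie"}:
        raise ValueError(f"unsupported chart type: {chart_type}")
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    labels = sorted(totals)
    return {
        "type": chart_type,
        "title": "Sales by region",
        "labels": labels,
        "values": [totals[label] for label in labels],
    }


def render_text_bars(spec: dict, width: int = 20) -> str:
    """Tiny text renderer: bar length is proportional to the largest value."""
    peak = max(spec["values"])
    lines = []
    for label, value in zip(spec["labels"], spec["values"]):
        bar = "#" * round(width * value / peak)
        lines.append(f"{label:<6} {bar} {value:,.0f}")
    return "\n".join(lines)


print(render_text_bars(sales_by_region_chart(SALES)))
```

Returning a spec rather than an image keeps the tool independent of how the client chooses to display the chart.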

Apply MCP to answer complex knowledge base questions

Suppose an employee asks a question about HR, taxes, or collective labor agreements. The MCP server retrieves relevant information from various internal knowledge bases, after which an LLM combines this information with the applicable laws and regulations. As a result, the employee receives a clear, context-specific, and compliant answer, tailored to both the organization and the individual.
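The retrieval step can be sketched as follows. This hedged Python example searches several hypothetical knowledge bases using naive keyword overlap and formats the best matches, tagged with their source, as context for the LLM. The knowledge base contents are invented placeholders, not real HR or tax rules, and a production MCP server would more likely use full-text or vector search.

```python
import re

# Hypothetical internal knowledge bases, keyed by source name (placeholder text).
KNOWLEDGE_BASES = {
    "hr_wiki": [
        "Employees accrue 25 vacation days per year.",
        "Remote work requires manager approval.",
    ],
    "cla": [
        "The collective labor agreement sets overtime pay at 125 percent.",
    ],
    "tax_faq": [
        "Travel allowances are tax-free up to the statutory limit.",
    ],
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, top_k: int = 2) -> list[tuple[str, str]]:
    """Rank passages from all knowledge bases by keyword overlap with the question."""
    q = tokenize(question)
    scored = []
    for source, passages in KNOWLEDGE_BASES.items():
        for passage in passages:
            score = len(q & tokenize(passage))
            if score:
                scored.append((score, source, passage))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(source, passage) for _, source, passage in scored[:top_k]]


def build_context(question: str) -> str:
    """Format retrieved passages with their sources for inclusion in the LLM prompt."""
    return "\n".join(f"[{source}] {passage}" for source, passage in retrieve(question))


print(build_context("How much overtime pay do I get?"))
```

Tagging each passage with its source lets the LLM cite where an answer came from, which makes it easier for the employee to verify.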

Our experience with MCP servers

At Silicon Low Code, we have made our own source code and internal wikis accessible to all our employees via MCP. This enables us to:

- Design faster thanks to real-time access to project documentation;

- Develop more efficiently, because questions about APIs and modules are answered directly by AI;

- Test reliably, with up-to-date specifications and bug fixes in a single chat interface.

Thanks to this setup, we shorten our development cycles and improve the quality of our output.

Conclusion: The impact of the MCP (Model Context Protocol)

The Model Context Protocol connects disparate data sources through a single, standardized layer, allowing LLMs to provide more reliable, organization-specific answers. Companies that embrace MCP can accelerate decision-making, reduce errors, and noticeably shorten lead times. Curious how you can achieve the same benefits?

Build your own MCP server

We have extensive practical experience and guide organizations in securely unlocking data for AI. This way, you combine the generic power of LLMs with your own knowledge base and increase innovation, efficiency, and reliability.

Interested? Request a demo now or schedule a technical intake meeting. Discover how MCP can help your organization work smarter, without setting up complex AI projects.
